Large Models

A New Age of Foundation Models

I believe we are currently experiencing the second golden age of AI research, driven by the emergence of large transformers and web-scale unsupervised pretraining. The first golden age was led by supervised pretraining on ImageNet and by CNNs. In light of this, I am motivated to work on projects that could become cornerstones of this new era in AI research.

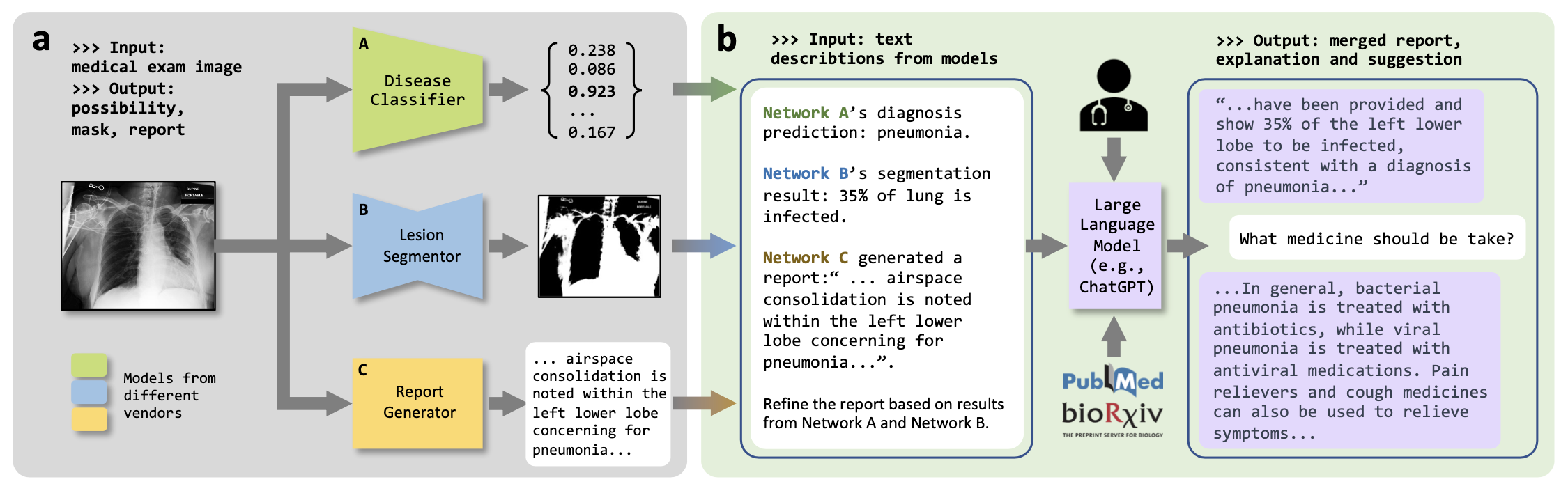

ChatCAD

We propose ChatCAD, which uses ChatGPT (or other LLMs) to merge the outputs of trained computer-aided diagnosis (CAD) networks. To make the results more reliable, we are adding a healthcare knowledge retriever to this framework (similar to New Bing).

ChatCAD: Interactive Computer-Aided Diagnosis on Medical Image using Large Language Models. S Wang, Z Zhao, X Ouyang, Q Wang, D Shen
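
To make the "merge" step more concrete, here is a minimal sketch of how CAD outputs and retrieved knowledge might be combined into a single LLM prompt. It assumes each CAD network outputs a per-disease probability that is turned into text. The probability-to-phrase thresholds, the toy `KNOWLEDGE_BASE`, the word-overlap retriever, and all function names are hypothetical illustrations, not ChatCAD's actual implementation, and the call to ChatGPT or another LLM is left out.

```python
import re

# Hypothetical mapping from a CAD classifier's probability to a phrase an LLM
# can read. These cut-offs are illustrative assumptions, not ChatCAD's values.
PHRASE_BANDS = [
    (0.2, "no sign of"),
    (0.5, "a small possibility of"),
    (0.9, "a likely presence of"),
    (1.01, "a definite presence of"),  # upper bound above 1.0 so p == 1.0 is covered
]
LIKELY = 0.5  # hypothetical threshold for findings worth retrieving knowledge about

# Toy stand-in for a healthcare knowledge base; a real retriever would index
# a curated medical corpus.
KNOWLEDGE_BASE = {
    "pleural effusion": "Pleural effusion is an accumulation of fluid in the pleural space.",
    "cardiomegaly": "Cardiomegaly means an enlarged heart.",
    "pneumonia": "Pneumonia is an infection that inflames the air sacs of the lungs.",
}


def tokens(text: str) -> set[str]:
    """Lower-case word tokens with punctuation stripped."""
    return set(re.findall(r"[a-z]+", text.lower()))


def describe_finding(disease: str, prob: float) -> str:
    """Turn one CAD network output into a sentence fragment."""
    if not 0.0 <= prob <= 1.0:
        raise ValueError(f"probability out of range: {prob}")
    for upper, phrase in PHRASE_BANDS:
        if prob < upper:
            return f"{phrase} {disease}"
    raise AssertionError("unreachable for prob in [0, 1]")


def retrieve(query: str, k: int = 2) -> list[str]:
    """Return up to k knowledge entries whose key shares words with the query."""
    q = tokens(query)
    ranked = sorted(
        KNOWLEDGE_BASE.items(),
        key=lambda item: len(q & tokens(item[0])),
        reverse=True,  # sorted() is stable, so ties keep insertion order
    )
    return [text for key, text in ranked[:k] if q & tokens(key)]


def build_prompt(cad_outputs: dict[str, float], question: str) -> str:
    """Merge CAD outputs and retrieved knowledge into one prompt string."""
    findings = "; ".join(describe_finding(d, p) for d, p in cad_outputs.items())
    likely = " ".join(d for d, p in cad_outputs.items() if p >= LIKELY)
    references = retrieve(question + " " + likely)
    lines = ["Reference knowledge:"]
    lines += [f"- {ref}" for ref in references] or ["- (none found)"]
    lines.append(f"CAD findings: the image shows {findings}.")
    lines.append(f"Patient question: {question}")
    lines.append("Answer as a radiologist, relying only on the findings and references above.")
    return "\n".join(lines)


if __name__ == "__main__":
    outputs = {"cardiomegaly": 0.95, "pneumonia": 0.1, "pleural effusion": 0.7}
    print(build_prompt(outputs, "Is there fluid around my lungs?"))
```

Keeping the LLM out of the sketch is deliberate: the CAD networks stay responsible for the image evidence, the retriever supplies reference text, and the language model only sees a plain-text prompt it can reason over.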

Our work was reported at the 78th China International Medical Equipment Fair (CMEF). We are also working on an upgrade, ChatCAD+: Towards a Universal and Reliable Interactive CAD using LLMs, which will be available online for all users to try.

DoctorGLM

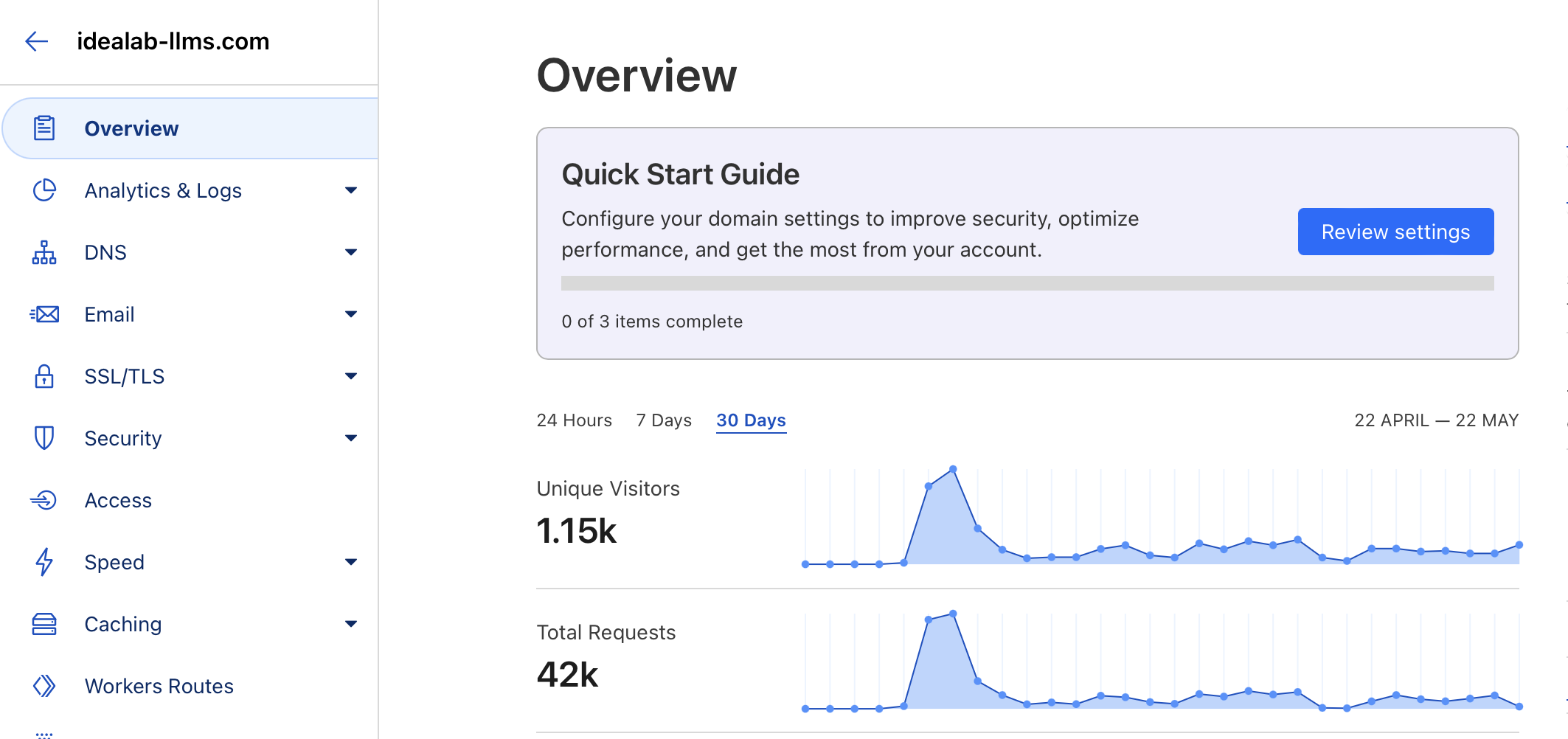

I led the DoctorGLM team and was responsible for training and releasing the first Chinese doctor large language model on April 4, 2023. Our research focused on optimizing data blending, alignment selection, and the choice of when to stop training. During the public beta from April 27 to May 22, according to Cloudflare, the service received over 1,000 unique visitors and successfully handled more than 40,000 real user questions.

Medical Low-rank Adaptation

We are also working on MeLo, a low-rank adaptation scheme for medical images intended to replace full fine-tuning in medical image analysis. As a programmer and engineer, I really enjoy this work. More information is coming soon.
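
For readers unfamiliar with low-rank adaptation, below is a generic LoRA-style layer in plain Python, offered only as a point of reference since MeLo's own design is not described here. The pretrained weight W stays frozen and only two small matrices are trained, A (r × d_in) and B (d_out × r), giving y = Wx + (α/r)·BAx. The class and helper names, the rank, and α are illustrative assumptions; this is not MeLo's code.

```python
import random

Matrix = list[list[float]]


def zeros(rows: int, cols: int) -> Matrix:
    return [[0.0] * cols for _ in range(rows)]


def gaussian(rows: int, cols: int, std: float, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, x: list[float]) -> list[float]:
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """Hypothetical frozen linear map W plus a trainable update (alpha / r) * B @ A."""

    def __init__(self, weight: Matrix, rank: int, alpha: float, seed: int = 0):
        self.d_out, self.d_in = len(weight), len(weight[0])
        self.rank = rank
        self.weight = weight                               # pretrained, never updated
        rng = random.Random(seed)
        self.a = gaussian(rank, self.d_in, 0.01, rng)      # r x d_in, small random init
        self.b = zeros(self.d_out, rank)                   # d_out x r, zero init
        self.scale = alpha / rank

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)                      # W x
        delta = matvec(self.b, matvec(self.a, x))          # B (A x)
        return [y + self.scale * d for y, d in zip(base, delta)]

    def merged_weight(self) -> Matrix:
        """Fold the update into W so inference costs the same as the base layer."""
        return [
            [
                self.weight[i][j]
                + self.scale * sum(self.b[i][k] * self.a[k][j] for k in range(self.rank))
                for j in range(self.d_in)
            ]
            for i in range(self.d_out)
        ]

    def trainable_params(self) -> int:
        return self.rank * (self.d_in + self.d_out)


if __name__ == "__main__":
    # Parameter budget for one 768 x 768 projection (the ViT-Base width) at rank 8.
    d, r = 768, 8
    full = d * d
    lora = r * (d + d)
    print(full, lora, full / lora)  # prints: 589824 12288 48.0
```

Because B starts at zero, the adapted layer initially reproduces the pretrained one exactly, and after training the update can be merged into W, so deployment adds no extra latency.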